What Device Is Best For NTP Services

UPDATE: To continue our support of this public NTP service, we have open-sourced our collection of NTP libraries on GitHub.

Almost all of the billions of devices connected to the internet have onboard clocks, which need to be accurate to properly perform their functions. Many clocks contain inaccurate internal oscillators, which can cause seconds of inaccuracy per day and need to be periodically corrected. Incorrect time can lead to issues, such as missing an important reminder or failing a spacecraft launch. Devices all over the world rely on Network Time Protocol (NTP) to stay synchronized to a more accurate clock over packet-switched, variable-latency data networks.

As Facebook's infrastructure has grown, time precision in our systems has become more and more important. We need to know the accurate time difference between two random servers in a data center so that datastore writes don't mix up the order of transactions. We need to sync all the servers across many data centers with sub-millisecond precision. For that we tested chrony, a modern NTP server implementation with interesting features. During testing, we found that chrony is significantly more accurate and scalable than the previously used service, ntpd, which made it an easy decision for us to replace ntpd in our infrastructure. Chrony also forms the foundation of our Facebook public NTP service, available from time.facebook.com. In this post, we will share our work to improve accuracy from 10 milliseconds to 100 microseconds and how we verified these results in our timing laboratory.

Leap second

Before we dive into the details of our NTP service, we need to look at a phenomenon called a leap second. Because of the Earth's rotation irregularities, we occasionally need to add or remove a second from time, or a leap second. For humans, adding or removing a second creates an almost unnoticeable hiccup when watching a clock. Servers, however, can miss a ton of transactions or events or experience a serious software malfunction when they expect time to go forward continuously. One of the most popular approaches for addressing that is to "smear" the leap second, which means to change the time in very small increments across multiple hours.

Building an NTP service at scale

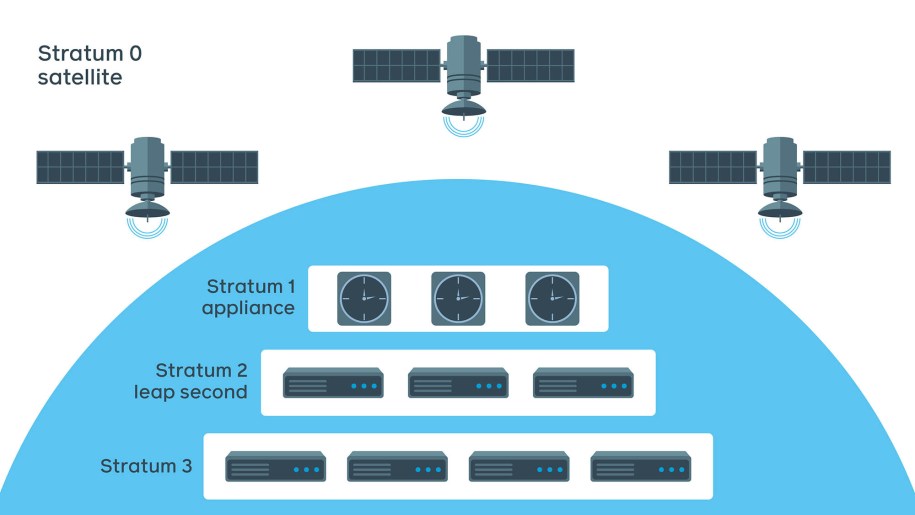

Facebook NTP service is designed in four layers, or strata:

- Stratum 0 is a layer of satellites with extremely precise atomic clocks from a global navigation satellite system (GNSS), such as GPS, GLONASS, or Galileo.

- Stratum 1 is a Facebook atomic clock synchronizing with a GNSS.

- Stratum 2 is a pool of NTP servers synchronizing to Stratum 1 devices. Leap-second smearing happens at this stage.

- Stratum 3 is a tier of servers configured for a larger scale. They receive smeared time and are ignorant of leap seconds.

There could be as many as 16 layers to distribute the load in some systems. The number of layers depends on the scale and precision requirements.

When we set out to build our NTP service, we tested the following time daemons to use:

- Ntpd: A common daemon, ntpd used to be included on most Unix-like operating systems. It has been a stable solution for many years and may be running on your computer right now.

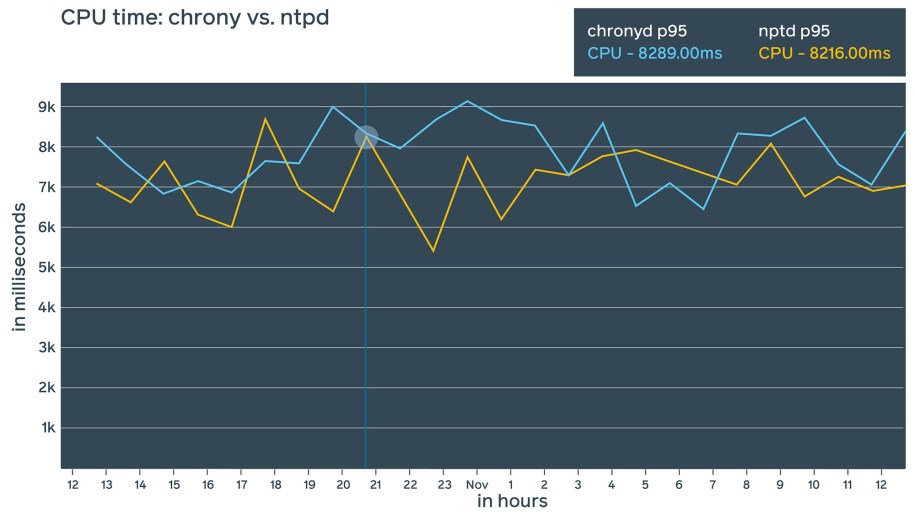

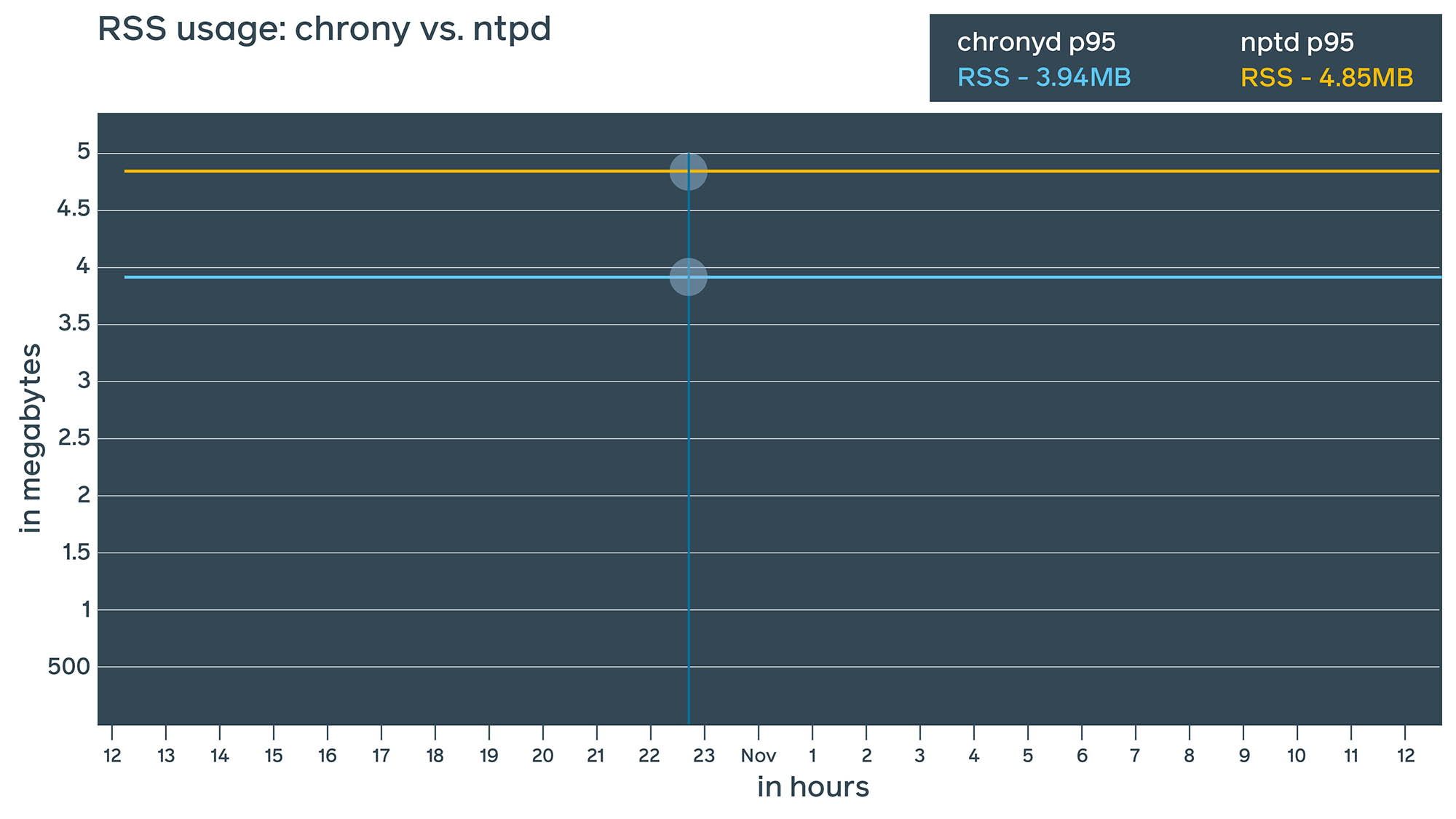

- Chrony: A fairly new daemon, chrony has interesting features and the potential to provide more precise time synchronization using NTP. Chrony also offers its own extended control protocol and theoretically could bring precision down to nanoseconds. From a resource consumption perspective, we found ntpd and chrony to be fairly similar, though chrony seems to consume slightly less RAM (~1 MiB difference).

Daemon estimates

Whether a system is using ntpd or chrony, each provides some estimated measurements. The daemon includes command line tools that display these estimates, as shown below. These estimates rely on many assumptions, such as:

- The network path between client and server is symmetric.

- When timestamps are added to the NTP packet and send() is called, the operating system will dispatch it immediately.

- Oscillator temperature and supplied voltage are constant.

Ntpd includes the ntpq command line tool, which displays how out of sync it determines that time is:

    [user@client ~]# ntpq -p
         remote           refid      st t when poll reach   delay   offset  jitter
    ==============================================================================
    +server1         .FB.             2 u  406 1024  377    0.029    0.149   0.035
    +server2         .FB.             2 u  548 1024  377    0.078    0.035   0.083
    *server3         .FB.             2 u  460 1024  377    0.049   -0.185   0.114

However, can we trust these numbers? If ntpd reports that the time is off by 0.185 ms, is that accurate? The short answer is no. The server estimates the offset based on multiple timestamps in a packet, and the actual values are supposed to be within a 10x bigger window. In other words, a result saying time is off by 0.185 ms means it's probably within +/-2 ms (so, 4 ms in total). However, our testing demonstrated that accuracy is generally within 10 ms with ntpd.

We had technical requirements for better precision. For instance, multi-master databases translate microseconds and even nanoseconds of precision directly into their theoretical throughput. Another example for which moderate precision is required is logging: in order to match logs between nodes of a distributed system, milliseconds of precision is often required.

Let's see what happens if we replace ntpd with chrony:

    [user@client ~]# chronyc sources -v
    210 Number of sources = 19
      .-- Source mode  '^' = server, '=' = peer, '#' = local clock.
     / .- Source state '*' = current synced, '+' = combined , '-' = not combined,
    | /   '?' = unreachable, 'x' = time may be in error, '~' = time too variable.
    ||                                                 .- xxxx [ yyyy ] +/- zzzz
    ||      Reachability register (octal) -.           |  xxxx = adjusted offset,
    ||      Log2(Polling interval) --.      |          |  yyyy = measured offset,
    ||                                \     |          |  zzzz = estimated error.
    ||                                 |    |           \
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^+ server1                       2   6   377     2    +10us[  +10us] +/-  481us
    ^- server2                       2   6   377     0  -4804us[-4804us] +/-   77ms
    ^* server3                       2   6   377    59    -42us[  -46us] +/-  312us
    ^+ server4                       3   6   377    60    +11ns[-3913ns] +/-  193us

Pay attention to those last three numbers. Backwards, from right to left:

- The very last number is the estimated error. It's prefixed with +/-. This is chrony's estimate of the maximum error. Sometimes it's 10s of milliseconds, sometimes it's 100s of microseconds (100x difference). This is because when chrony synchronizes with another chrony, it uses the extended NTP protocol, which dramatically increases precision. Not too bad!

- Next is the number in the square brackets. It shows the measured offset. We can see approximately 100x difference here as well, except for server4 (we will talk about this one later).

- The number to the left of the square brackets shows the original measurement, adjusted to allow for any slews applied to the local clock since that first measurement. Again, we can see 100x difference between ntpd and chrony.
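To compare these three numbers across many sources, it helps to convert them to a common unit. The following is a minimal sketch of a parser for the "Last sample" column, assuming only the `ns`, `us`, `ms`, and `s` suffixes that appear in the output above. The names `parse_last_sample` and `LastSample` are hypothetical and not part of chrony.

```python
import re
from typing import NamedTuple

# Unit suffixes seen in the "Last sample" column, mapped to seconds.
_UNITS = {"ns": 1e-9, "us": 1e-6, "ms": 1e-3, "s": 1.0}

# Matches e.g. "+11ns[-3913ns] +/- 193us" anywhere in a chronyc source line.
_SAMPLE_RE = re.compile(
    r"(?P<adj>[+-]?\d+)(?P<adj_u>ns|us|ms|s)\s*"
    r"\[\s*(?P<meas>[+-]?\d+)(?P<meas_u>ns|us|ms|s)\]\s*"
    r"\+/-\s*(?P<err>\d+)(?P<err_u>ns|us|ms|s)"
)


class LastSample(NamedTuple):
    adjusted_offset: float   # seconds, number left of the brackets
    measured_offset: float   # seconds, number inside the brackets
    estimated_error: float   # seconds, number after "+/-"


def parse_last_sample(line: str) -> LastSample:
    """Extract the three 'Last sample' values from one chronyc source line."""
    match = _SAMPLE_RE.search(line)
    if match is None:
        raise ValueError(f"no 'Last sample' column found in: {line!r}")

    def to_seconds(value: str, unit: str) -> float:
        return int(value) * _UNITS[unit]

    return LastSample(
        adjusted_offset=to_seconds(match["adj"], match["adj_u"]),
        measured_offset=to_seconds(match["meas"], match["meas_u"]),
        estimated_error=to_seconds(match["err"], match["err_u"]),
    )
```

Feeding each source line from `chronyc sources` into `parse_last_sample` gives values in seconds that can be sorted or plotted directly.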

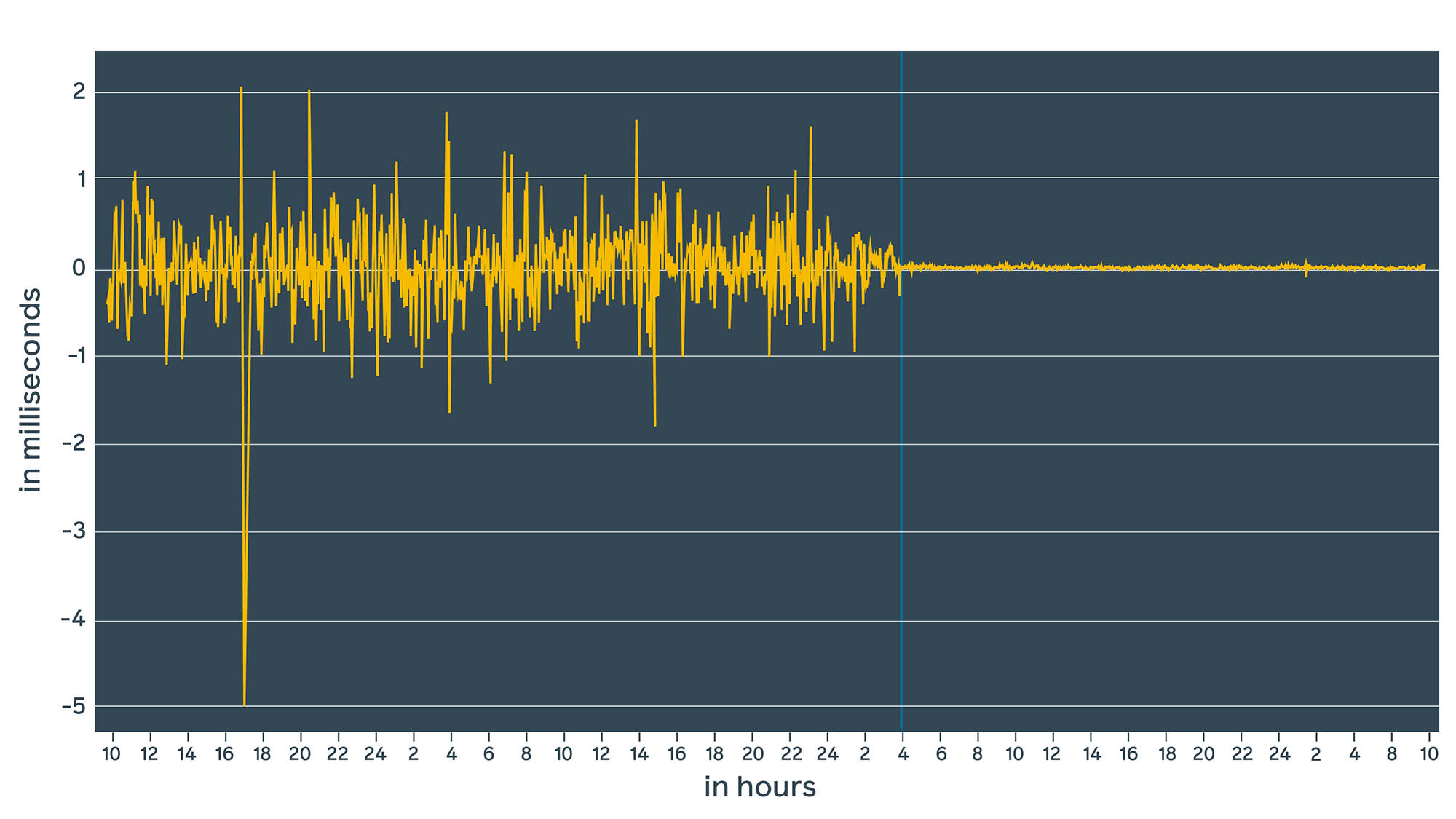

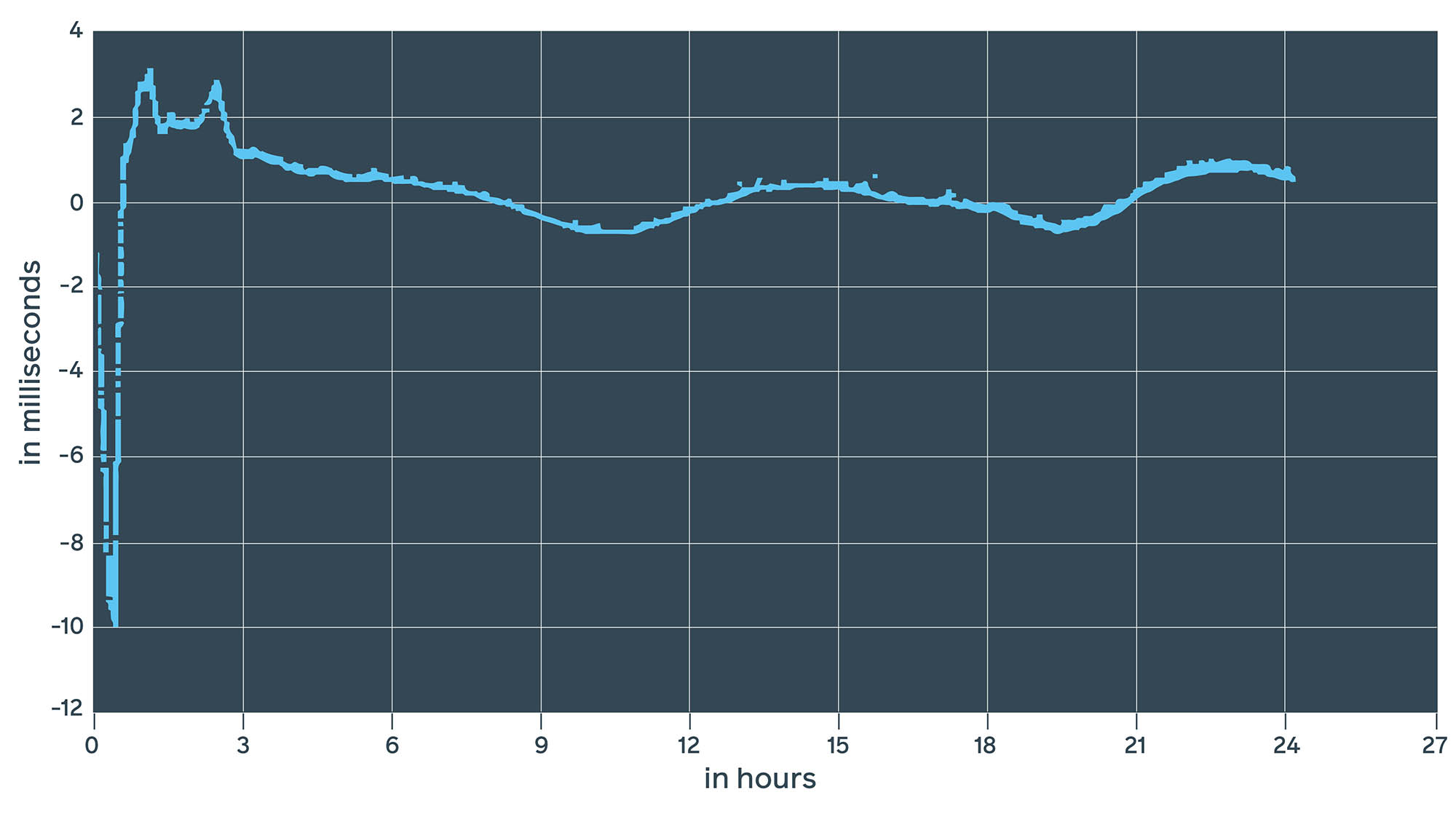

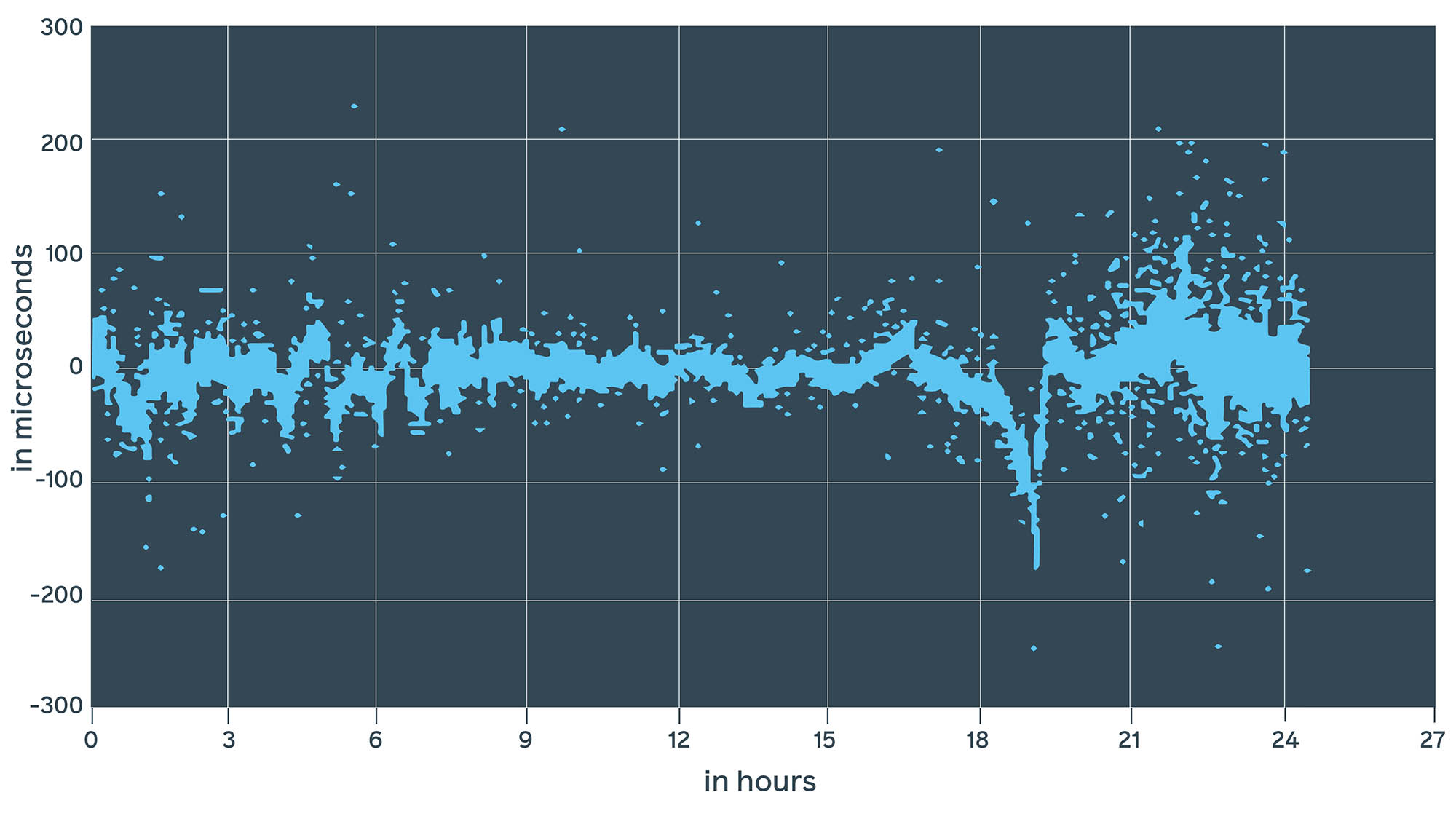

Let's see how it looks in the graph:

The vertical blue line represents the moment when ntpd was replaced with chrony. With ntpd, we were in the range of +/-1.5 ms. With chrony, we are in the range of microseconds. More importantly, the estimated error (window) dropped to a range of 100s of microseconds, which we can confirm with lab measurements (more on this below). However, these values are estimated by daemons. In reality, the actual time delta might be completely different. How can we validate these numbers?

Pulse per second (1PPS)

We can extract an analog signal from the atomic clock (actually the internal timing circuitry from a Stratum 1 device). This signal is called 1PPS, which stands for one pulse per second; it generates a pulse over the coaxial cable at the beginning of every second. It is a popular and accurate method of synchronization. We can generate the same pulse from an NTP server and then compare to see how off the phases are. The challenge here is that not all servers support 1PPS, so this requires specialized network extension cards.

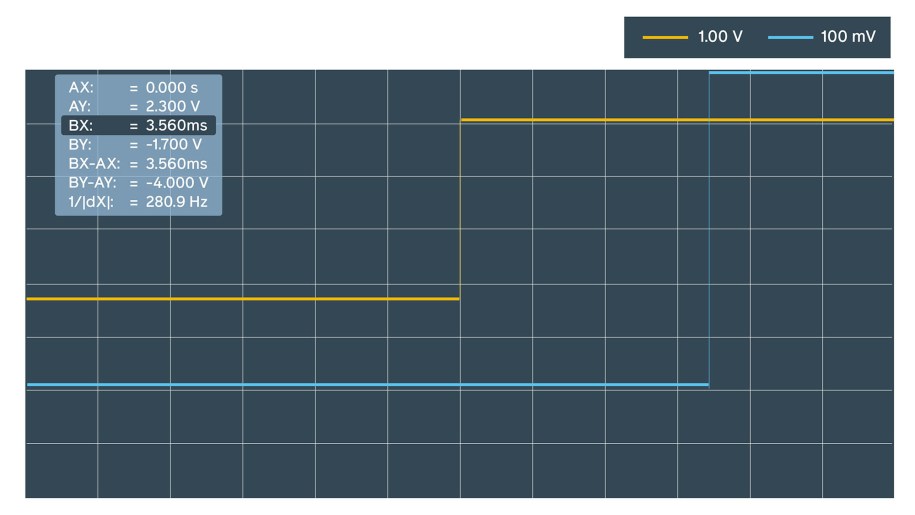

Our first attempt was pretty much manual. It involved an oscilloscope to display a phase shift.

Within 10 minutes of measurements we estimated the offset to be approximately 3.5 ms for ntpd, sometimes jumping to 10 ms.

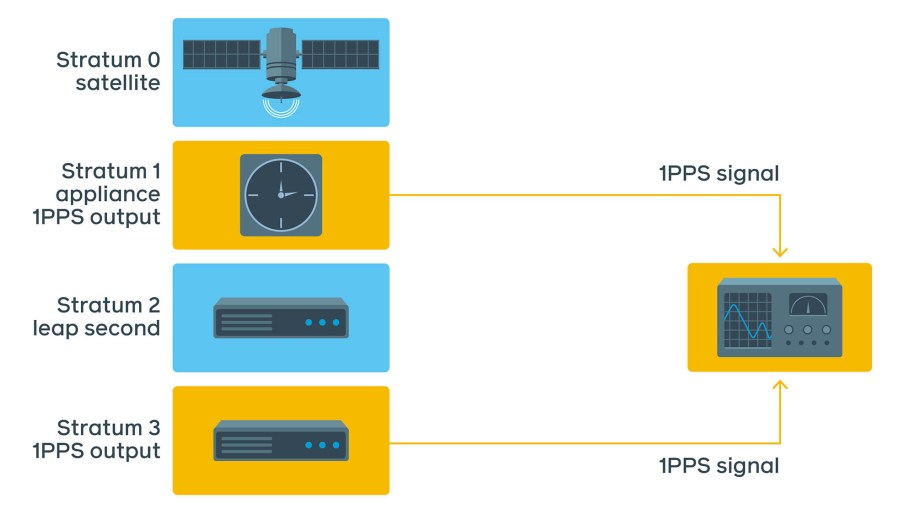

This test would be extremely hard and impractical to implement at Facebook scale. A better test involves connecting the 1PPS output of the test server back into the 1PPS input of the Stratum 1 device itself and monitoring the difference.

This method has all the upsides of the previous 1PPS measurements but without the downsides of operating an oscilloscope in a data center. As this test runs continuously, we are able to take measurements and verify the real NTP offset at any point in time.

These two measurements give us a very good understanding of the real NTP offset, with a very small estimated error from 1PPS of just nanoseconds, mostly added by the cable length.
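To get a feel for why cable length puts this error in the nanosecond range, here is a rough sketch of the propagation delay of a pulse through a cable. The default velocity factor of 0.66 is an assumption (a typical figure for solid-polyethylene coax); the actual value depends on the cable used, and `cable_delay_ns` is a hypothetical helper name.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def cable_delay_ns(length_m: float, velocity_factor: float = 0.66) -> float:
    """Return the one-way propagation delay of a cable in nanoseconds.

    velocity_factor is the signal speed in the cable as a fraction of the
    speed of light; 0.66 is an ASSUMED typical value, not a measured one.
    """
    if length_m < 0:
        raise ValueError("length_m must not be negative")
    if not 0 < velocity_factor <= 1:
        raise ValueError("velocity_factor must be in the range (0, 1]")
    return length_m / (SPEED_OF_LIGHT_M_PER_S * velocity_factor) * 1e9


if __name__ == "__main__":
    for metres in (1, 2, 10):
        print(f"{metres:>3} m of cable adds about {cable_delay_ns(metres):.1f} ns")
```

Under that assumption each metre of cable adds roughly 5 ns, so a short in-rack cable stays well below the microsecond-level offsets being measured.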

The disadvantages of these methods are:

- Cabling: Coaxial cable is required for such measurements. Doing spot checks in different data centers requires changes in the data center design, which is challenging.

- Custom hardware: Not all network cards have 1PPS outputs. Tests like these require unique network cards and servers.

- Stratum 1 device with 1PPS input.

- 1PPS software on the server: To run the test, we had to install ntpd on our test server. This daemon adds extra error as it works in user space and is scheduled by Linux.

Dedicated testing device

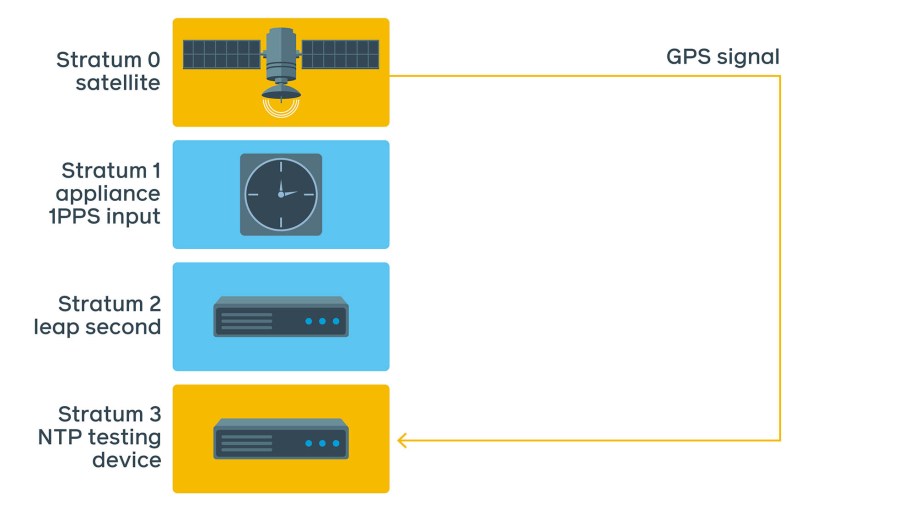

There are devices on the market that can perform accuracy checks. They contain GNSS receivers, have an atomic clock, have multiple 1PPS and network interfaces, and also act as NTP clients. This allows us to perform the same checks using the NTP protocol directly. The received NTP packet is recorded with a very accurate timestamp from the atomic clock/GNSS receiver.

Here is the typical setup:

Our initial ad-hoc test setup is shown above. There are several advantages when measuring with this device:

- It does not require extra 1PPS cabling. We still need to discipline the atomic clock, but this can be done using GNSS or the Stratum 1 device itself and a short cable when in the same rack.

- It uses the atomic clock's data to stamp transmitted and received network packets. This makes the operating system's influence negligible, with the error rate in nanoseconds.

- It supports both NTP and PTP (Precision Time Protocol).

- The device is portable, so we can move it between locations to perform spot checks.

- The device uses its own format for data points, but it can export data to CSV, which enables us to convert the data to our internal data standard.

NTPD measurements

This looks pretty similar to what we've seen with daemon estimates and 1PPS measurements. At first, we see a 10 ms drop, which is slowly corrected to +/-1 ms. Interestingly enough, this 10 ms drop is pretty persistent and repeats after every restart.

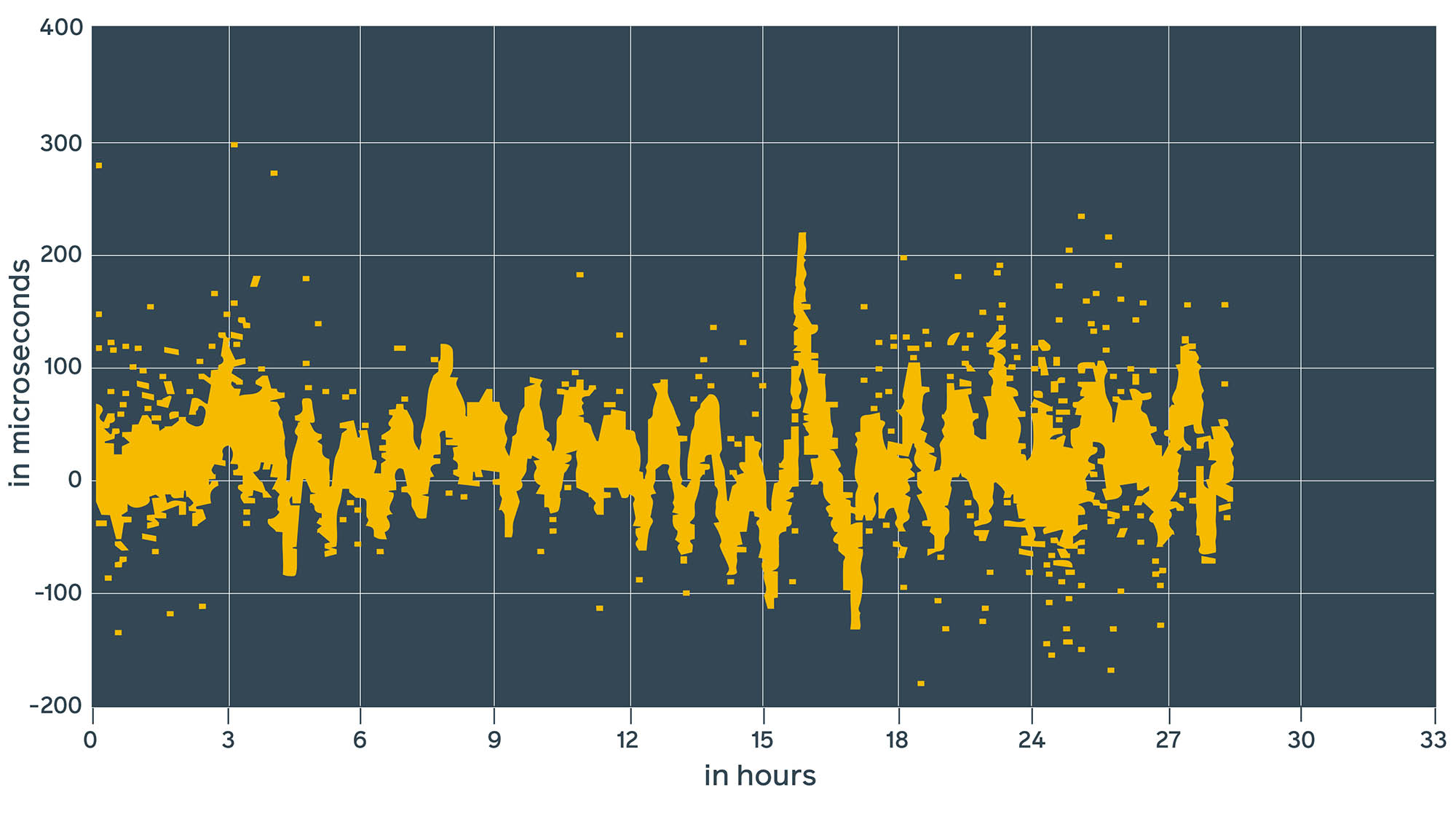

Chrony measurements

This looks pretty similar to the chrony daemon estimates. It's 10 to 100 times better than ntpd.

Chrony with hardware timestamps

Chrony has greatly improved the offset, which can be seen from the estimated, 1PPS, and lab device values. But there is more: Chrony supports hardware timestamps. According to the documentation, it claims to bring accuracy to within hundreds of nanoseconds.

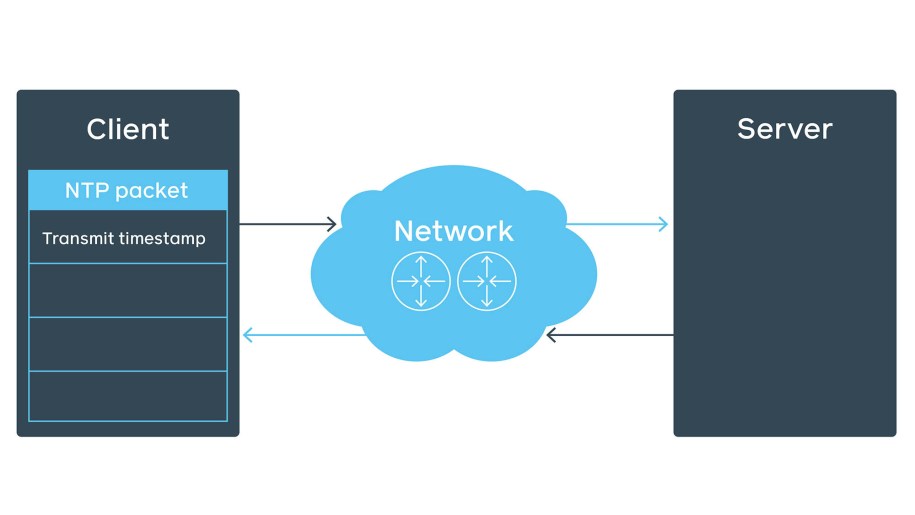

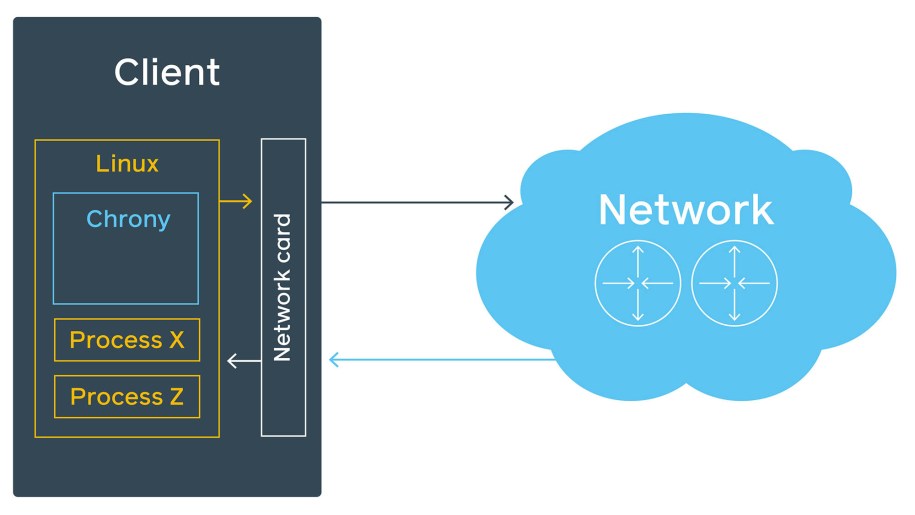

Let's look at the NTP packet structure in NTP client-server communication over the network. The initial client's NTP packet contains the transmit timestamp field.

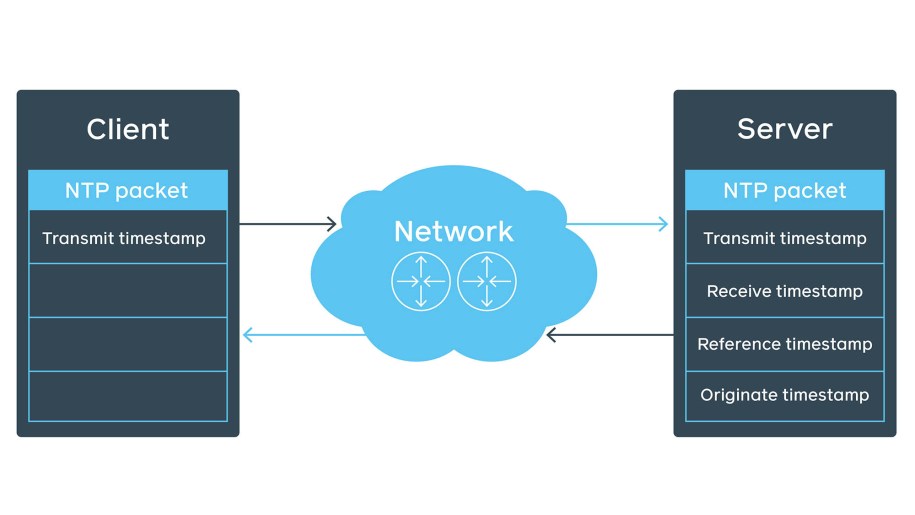

The server fills the rest of the fields (e.g., receive timestamp), preserves the client's transmit timestamp as the originate timestamp, and sends the packet back to the client.

This behavior can be verified using tcpdump:

    08:37:31.489921 IP6 (hlim 127, next-header UDP (17) payload length: 56) client.36915 > time1.facebook.com.ntp: [bad udp cksum 0xf5d2 -> 0x595e!] NTPv4, length 48
        Client, Leap indicator: clock unsynchronized (192), Stratum 0 (unspecified), poll 3 (8s), precision -6
        Root Delay: 1.000000, Root dispersion: 1.000000, Reference-ID: (unspec)
          Reference Timestamp:  0.000000000
          Originator Timestamp: 0.000000000
          Receive Timestamp:    0.000000000
          Transmit Timestamp:   3783170251.489887309 (2019/11/19 08:37:31)
            Originator - Receive Timestamp:  0.000000000
            Originator - Transmit Timestamp: 3783170251.489887309 (2019/11/19 08:37:31)
    ...
    08:37:31.490923 IP6 (hlim 52, next-header UDP (17) payload length: 56) time1.facebook.com.ntp > server.36915: [udp sum ok] NTPv4, length 48
        Server, Leap indicator:  (0), Stratum 1 (primary reference), poll 3 (8s), precision -32
        Root Delay: 0.000000, Root dispersion: 0.000152, Reference-ID: FB
          Reference Timestamp:  3783169800.000000000 (2019/11/19 08:30:00)
          Originator Timestamp: 3783170251.489887309 (2019/11/19 08:37:31)
          Receive Timestamp:    3783170251.490613035 (2019/11/19 08:37:31)
          Transmit Timestamp:   3783170251.490631213 (2019/11/19 08:37:31)
            Originator - Receive Timestamp:  +0.000725725
            Originator - Transmit Timestamp: +0.000743903
    ...

The client will get the packet, append yet another receive timestamp, and calculate the offset using the following formula from the NTP RFC #958:

c = (t2 - t1 + t3 - t4)/2
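As a worked illustration of the formula above, the sketch below plugs in the three timestamps visible in the tcpdump capture. The fourth timestamp, t4 (when the client receives the reply), does not appear in the capture, so the value used here is an assumption taken from the tcpdump arrival time of the response. `Decimal` is used because nanosecond-resolution NTP timestamps do not fit exactly in a 64-bit float. The round-trip delay shown alongside is the standard NTP companion calculation, not something stated in the text.

```python
from decimal import Decimal


def ntp_offset_and_delay(t1, t2, t3, t4):
    """Return (offset, round_trip_delay) from the four NTP timestamps.

    t1: client transmit, t2: server receive,
    t3: server transmit, t4: client receive.
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2   # c = (t2 - t1 + t3 - t4) / 2
    delay = (t4 - t1) - (t3 - t2)          # time on the wire, excluding server processing
    return offset, delay


# t1..t3 are copied from the capture; t4 is an ASSUMED value based on the
# tcpdump arrival time of the reply (08:37:31.490923).
t1 = Decimal("3783170251.489887309")
t2 = Decimal("3783170251.490613035")
t3 = Decimal("3783170251.490631213")
t4 = Decimal("3783170251.490923000")

offset, delay = ntp_offset_and_delay(t1, t2, t3, t4)
print(f"offset: {offset * 1_000_000:.3f} us")
print(f"round-trip delay: {delay * 1_000_000:.3f} us")
```

The formula silently assumes that the outbound and return paths take equal time; any asymmetry between them shows up directly as offset error.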

However, Linux is not a real-time operating system, and it has more than one process to run. So, when the transmit timestamp is filled and Write() is called, there is no guarantee that data is sent over the network immediately.

Additionally, if the machine does not have much traffic, an ARP request might be required before sending the NTP packet. All of this goes unaccounted for and ends up in the estimated error. Luckily, chrony supports hardware timestamps. With these, chrony on the other end can determine with high precision when the packet was processed by the network interface. There is still a delay between the moment the network card stamps the packet and when it actually leaves it, but it's less than 10 ns.

Remember this chronyc sources output from before?

    ^+ server4                       2   6   377    60    +11ns[-3913ns] +/-  193us

Chrony reports an offset of 11 ns. This is the result of hardware timestamps being enabled on the involved parties. However, the estimated error is in the range of hundreds of microseconds. Not every network card supports hardware timestamps, but they are becoming more popular, so chances are your network card already supports them.

To verify support for hardware timestamps, simply run the ethtool check, and you should see the hardware-transmit and hardware-receive capabilities.

    ethtool -T eth0
    Time stamping parameters for eth0:
    Capabilities:
        hardware-transmit     (SOF_TIMESTAMPING_TX_HARDWARE)
        hardware-receive      (SOF_TIMESTAMPING_RX_HARDWARE)
        hardware-raw-clock    (SOF_TIMESTAMPING_RAW_HARDWARE)
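When checking many hosts, the same test can be scripted. This is a minimal sketch that looks for the two capabilities in `ethtool -T` output, assuming ethtool prints one capability per line with the capability name as the first word. The function names are hypothetical, and the interface name passed to `check_interface` is whatever applies on the host.

```python
import subprocess

# Capabilities the text says to look for.
REQUIRED_CAPABILITIES = ("hardware-transmit", "hardware-receive")


def supports_hw_timestamps(ethtool_output: str) -> bool:
    """Return True if both hardware TX and RX timestamping are listed."""
    first_words = {
        line.split()[0]
        for line in ethtool_output.splitlines()
        if line.strip()
    }
    return all(cap in first_words for cap in REQUIRED_CAPABILITIES)


def check_interface(interface: str) -> bool:
    """Run `ethtool -T <interface>` and inspect its output.

    Requires ethtool to be installed; raises CalledProcessError on failure.
    """
    result = subprocess.run(
        ["ethtool", "-T", interface],
        capture_output=True,
        text=True,
        check=True,
    )
    return supports_hw_timestamps(result.stdout)
```

Keeping the parsing separate from the `subprocess` call means the check can be exercised against saved output without access to the hardware.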

By using the lab device to test the same hardware, we were able to achieve different results, where ntpd showed between -10 ms and 3 ms (13 ms difference), chrony showed between -200 µs and 200 µs, and in most cases, chrony with enabled hardware timestamps showed between -100 µs and 100 µs. This confirms that daemon estimates might be inaccurate.

Public services

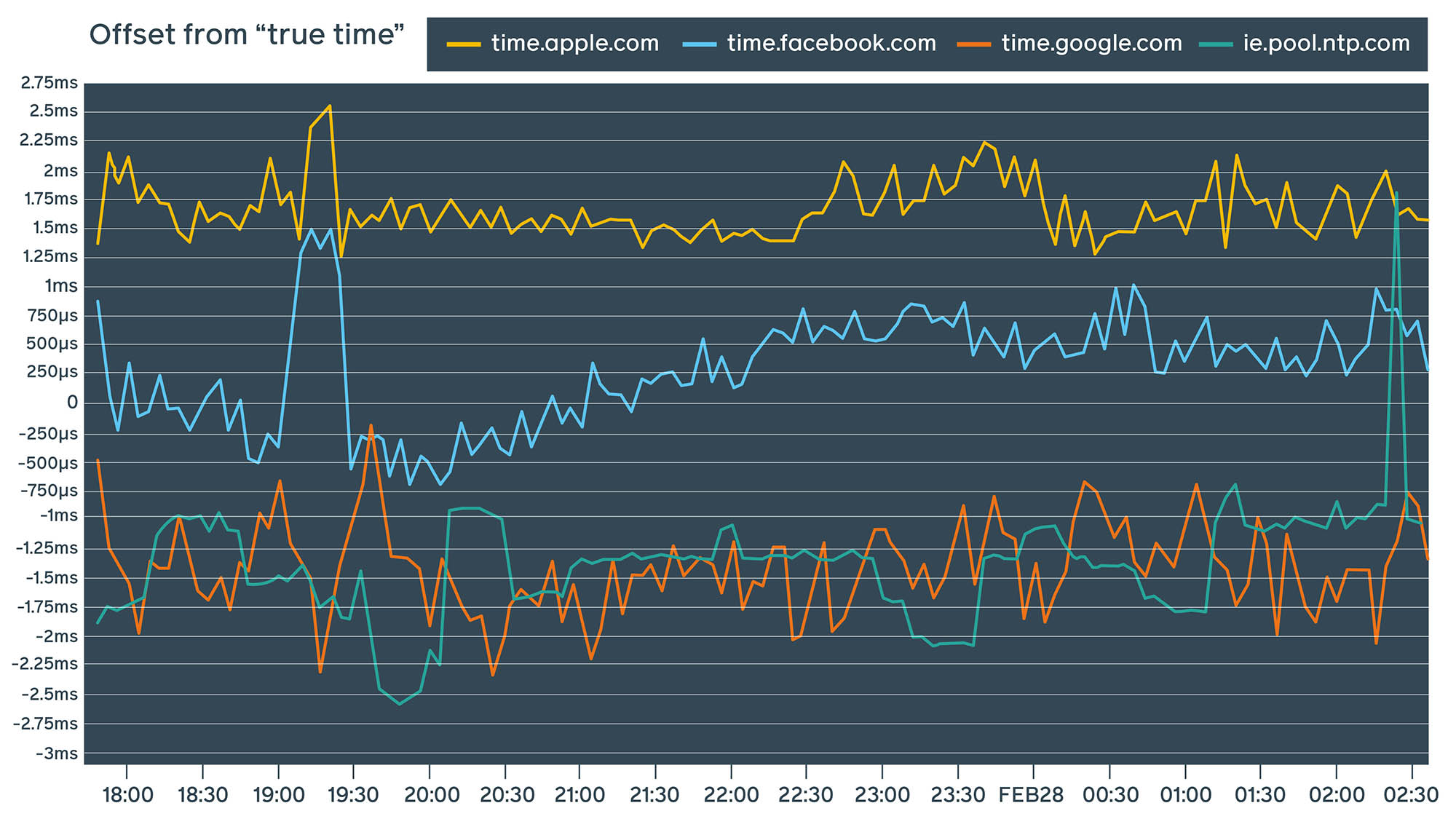

All these measurements were done in our internal controlled data center networks. Let's see what happens when we run this device against our public NTP service and some other well-known public NTP service providers:

The results of these measurements depend heavily on the network path as well as the speed and quality of the network connection. These measurements were performed multiple times against services from different locations and across a variety of Wi-Fi and LAN networks not associated with Facebook. We can see that not only is our public NTP endpoint competitive with other popular providers, but in some cases, it also outperforms them.

Public NTP design decisions

Once we succeeded in bringing internal precision to the sub-millisecond level, we launched a public NTP service, which can be used by setting time.facebook.com as the NTP server. We run this public NTP service out of our network PoPs (points of presence). To preserve privacy, we do not fingerprint devices by IP address. Having five independent geographically distributed endpoints helps us provide better service, even in the event of a network path failure. So we provide five endpoints:

- time1.facebook.com

- time2.facebook.com

- time3.facebook.com

- time4.facebook.com

- time5.facebook.com

Each of these endpoints terminates in a different geographic location, which has a positive effect on both reliability and time precision.
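For a quick look at what one of these endpoints returns, the sketch below sends a bare SNTP-style client request and reads the server's transmit timestamp. It is only an illustration of the 48-byte packet layout: it ignores the other timestamps, does no delay compensation, and is not a replacement for chrony or ntpd. The function names are hypothetical, and it assumes the host is reachable over IPv4 on UDP port 123.

```python
import socket
import struct

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_UNIX_EPOCH_DELTA = 2_208_988_800


def build_request() -> bytes:
    """Build a minimal 48-byte NTP client request.

    First byte: leap indicator 0, version 4, mode 3 (client) -> 0x23.
    All remaining fields are left as zero.
    """
    return bytes([0x23]) + bytes(47)


def parse_transmit_timestamp(packet: bytes) -> float:
    """Return the server transmit timestamp as Unix time in seconds."""
    if len(packet) < 48:
        raise ValueError("NTP packet must be at least 48 bytes")
    # Bytes 40..47: 32-bit seconds and 32-bit fraction, big-endian.
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return seconds - NTP_UNIX_EPOCH_DELTA + fraction / 2**32


def query(host: str = "time.facebook.com", port: int = 123,
          timeout: float = 2.0) -> float:
    """Send one request to host and return the server's transmit time."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_request(), (host, port))
        data, _ = sock.recvfrom(512)
    return parse_transmit_timestamp(data)
```

Calling `query("time1.facebook.com")` sends a single UDP packet; comparing the result with `time.time()` gives only a coarse sanity check, since network delay is not subtracted.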

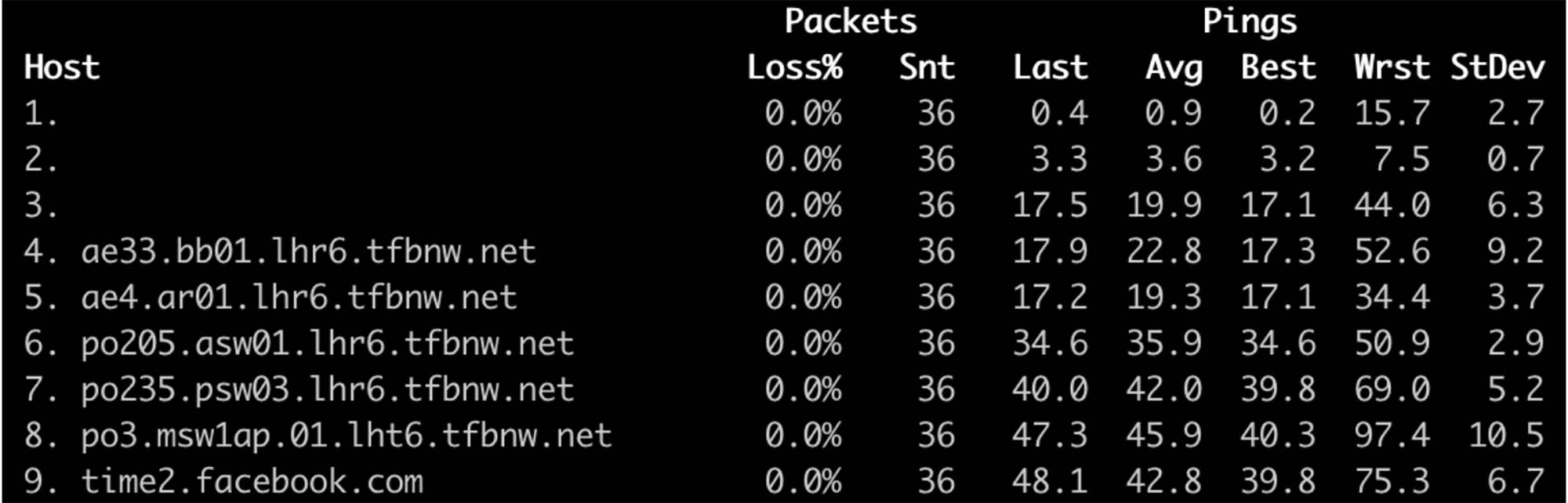

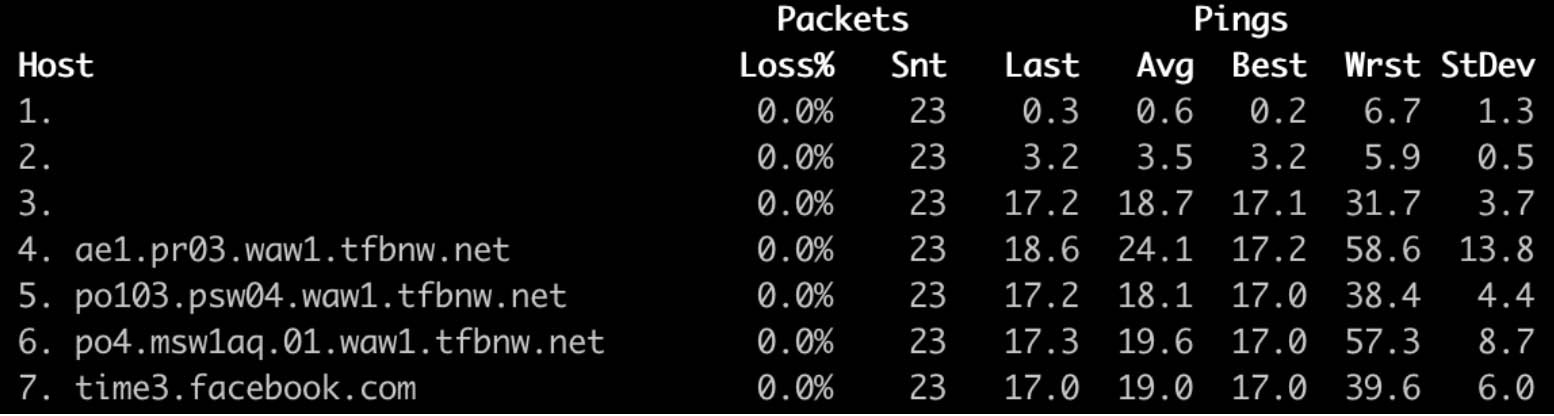

time2.facebook.com network path:

time3.facebook.com network path:

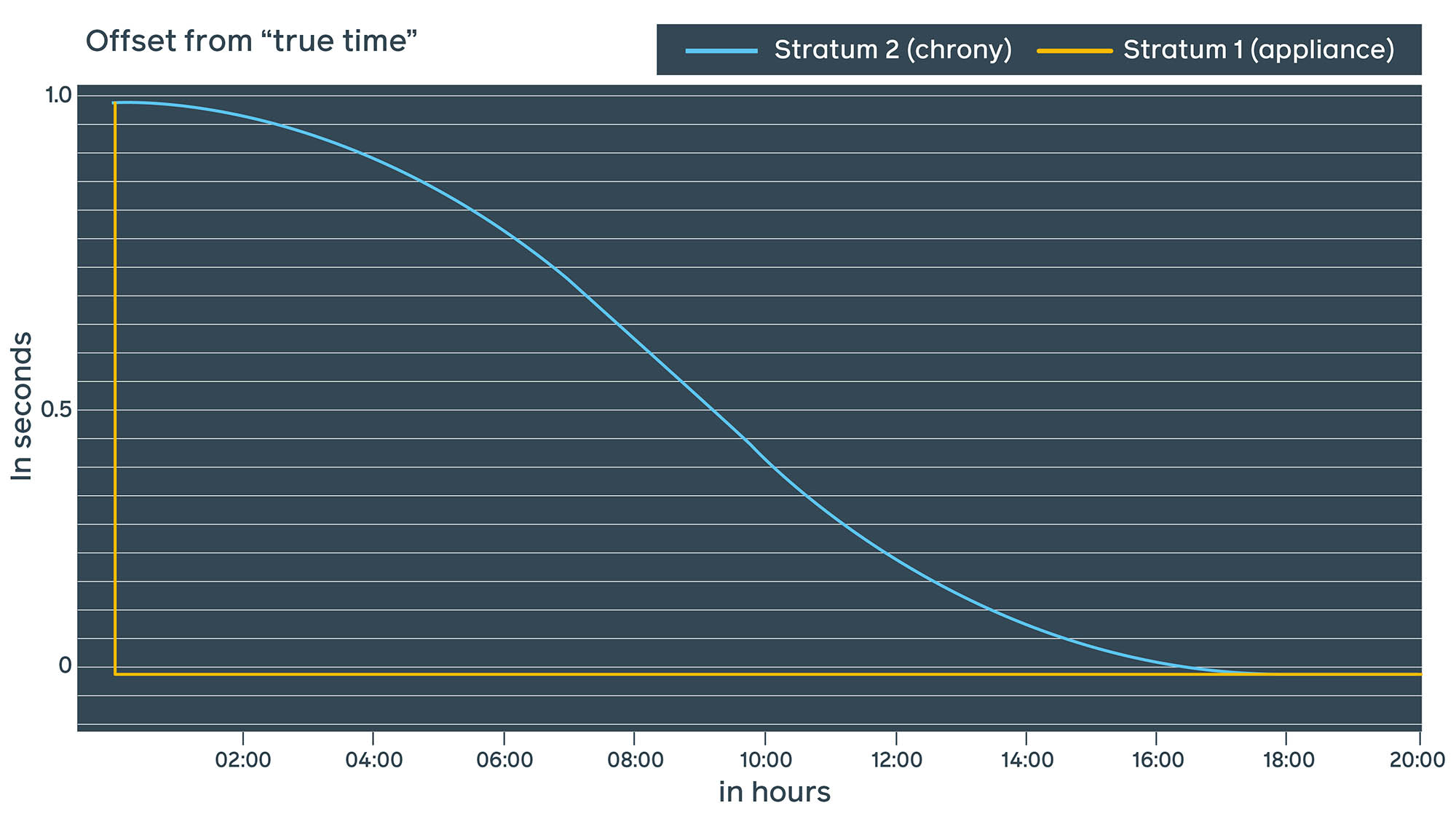

Leap-second smearing

Ntpd uses a leap-seconds.list file that's published in advance. Using this file, smearing can start early and the time will be correct by the time the leap second actually occurs.

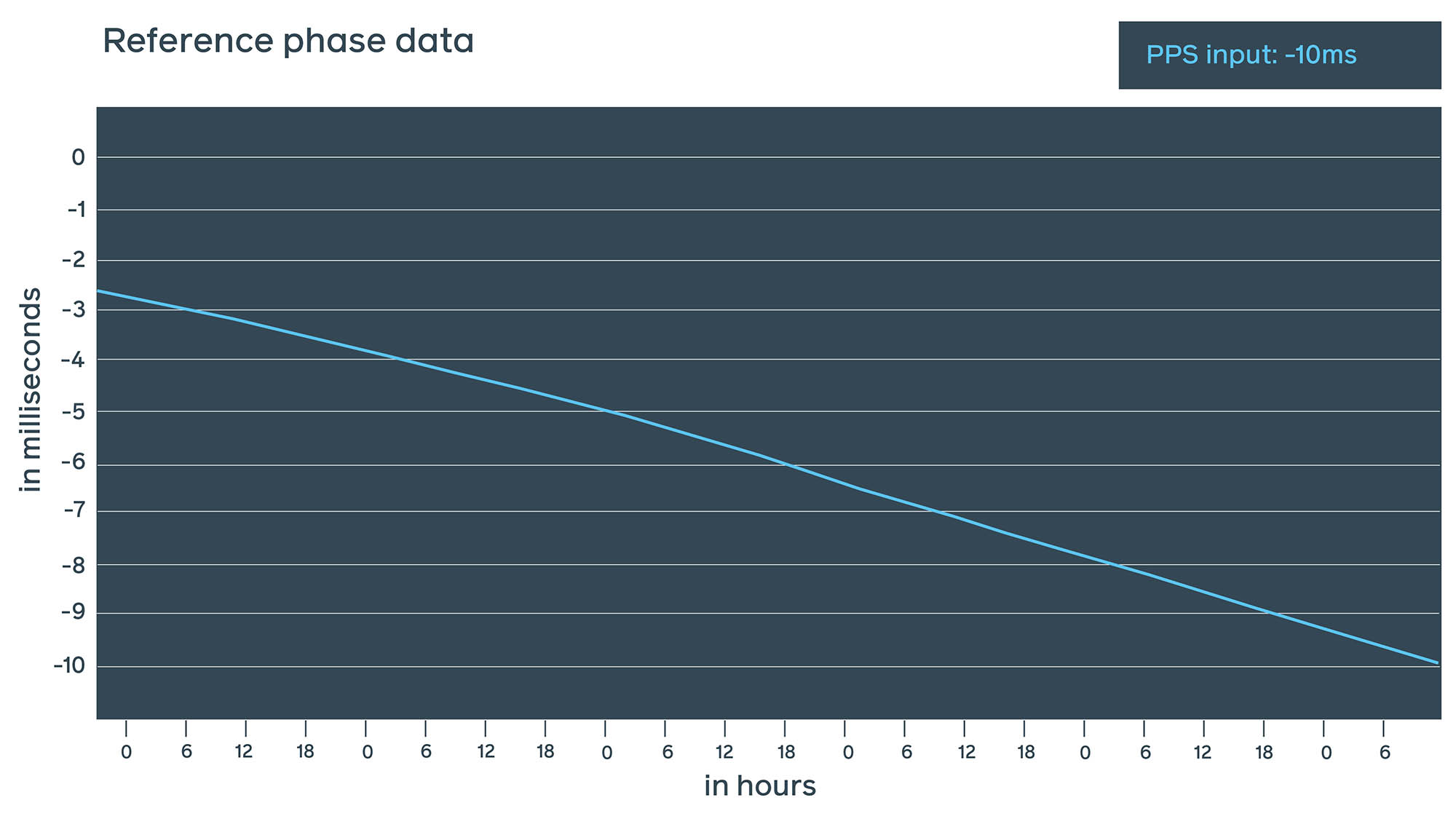

Chrony relies on the leap-second indicator published by GNSS hours in advance. When the leap second event actually happens at 00:00 UTC, it starts to smear throughout a specified time period. With Facebook public NTP, we decided to go with the chrony approach and smear the leap second after the event over approximately 18 hours.

As smearing happens on many Stratum 2 servers at the same time, it's important for the steps to be as similar as possible. Smoother sine-curve smearing is also safer for the applications.
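To make the idea of a smooth smear concrete, here is a sketch of one possible curve: a raised cosine that spreads one second over an 18-hour window starting at the leap event. It is an illustration of the shape only, under the assumption of a positive one-second leap; it is not chrony's actual smoothing algorithm or the exact curve used in production, and `smear_offset` is a hypothetical name.

```python
import math

SMEAR_WINDOW_S = 18 * 60 * 60  # approximately 18 hours, as described above


def smear_offset(seconds_since_leap: float,
                 window_s: float = SMEAR_WINDOW_S,
                 leap_s: float = 1.0) -> float:
    """Return how much of the leap second has been absorbed so far.

    The curve is a raised cosine: it starts and ends with zero slope, so the
    clock rate changes gradually instead of jumping at the window edges.
    """
    if seconds_since_leap <= 0:
        return 0.0
    if seconds_since_leap >= window_s:
        return leap_s
    phase = math.pi * seconds_since_leap / window_s
    return leap_s * 0.5 * (1.0 - math.cos(phase))


if __name__ == "__main__":
    for hours in (0, 3, 6, 9, 12, 15, 18):
        absorbed = smear_offset(hours * 3600)
        print(f"{hours:>2} h after the leap: {absorbed:.4f} s absorbed")
```

With this shape the correction is fastest in the middle of the window, peaking at about 24 microseconds per second, and every server evaluating the same function at the same moment applies the same correction.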

What we've learned

Measuring time is challenging. Both ntpd and chrony provide estimates that are somewhat truthful. In general, if you want to monitor the real offset, we recommend using 1PPS or external devices with GNSS receivers and an atomic clock.

In comparing ntpd with chrony, our measurements indicate that chrony is far more precise, which is why we've migrated our infra to chrony and launched a public NTP service. We've found that the effort to migrate is worth the immediate improvement in precision from tens of milliseconds to hundreds of microseconds.

Using hardware timestamps can further improve precision by two orders of magnitude. Despite its improvements, NTP has its own limitations, so evaluating PTP can take your precision to the next level.

We'd like to thank Andrei Lukovenko, Alexander Bulimov, Rok Papez, and Yaroslav Kolomiiets for their support on this project.

Source: https://engineering.fb.com/2020/03/18/production-engineering/ntp-service/

Posted by: williamsvanctiod.blogspot.com
